`random-search` - Hyperparameters exploration

This functionality trains a model whose hyperparameters are randomly sampled from a predefined space.

The hyperparameter space is defined in a `random_search.toml` file that must be filled in manually by the user. Some of its variables are mandatory.

This file contains the fixed values, or the intervals and sets to sample from. The sampling functions are the following:

- `choice` samples one element from a list,
- `uniform` samples from a uniform distribution over the interval [min, max],
- `exponent` draws x from a uniform distribution over the interval [min, max] and returns 10^-x,
- `randint` returns an integer in [min, max].

The values of some variables are also `fixed`, meaning that they cannot be sampled and must be given a single value.
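The sampling functions can be sketched in Python as follows. This is a minimal illustration of the semantics described above, not ClinicaDL's implementation; the function names, the `SAMPLERS` table and the `sample_value` dispatcher are hypothetical.

```python
import random


def sample_choice(options, rng=random):
    """choice: pick one element of a list."""
    return rng.choice(options)


def sample_uniform(interval, rng=random):
    """uniform: draw a float uniformly in [min, max]."""
    low, high = interval
    return rng.uniform(low, high)


def sample_exponent(interval, rng=random):
    """exponent: draw x uniformly in [min, max] and return 10^-x."""
    low, high = interval
    return 10 ** -rng.uniform(low, high)


def sample_randint(interval, rng=random):
    """randint: draw an integer in [min, max], both bounds included."""
    low, high = interval
    return rng.randint(low, high)


def sample_fixed(value, rng=random):
    """fixed: the value is used as given, without sampling."""
    return value


# Hypothetical table mapping a sampling-function name to its implementation.
SAMPLERS = {
    "choice": sample_choice,
    "uniform": sample_uniform,
    "exponent": sample_exponent,
    "randint": sample_randint,
    "fixed": sample_fixed,
}


def sample_value(function_name, spec, rng=random):
    """Sample one hyperparameter value from its specification."""
    return SAMPLERS[function_name](spec, rng)
```

For instance, `sample_value("exponent", [2, 5])` returns a value between 1e-5 and 1e-2, which is how `learning_rate = [2, 5]` is interpreted in the example file of this page.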

The variables that can be set in `random_search.toml` correspond to the options of the train function. The values of the Computational resources section can also be redefined on the command line.

Some variables were also added to sample the architecture of the network.

Prerequisites

You need to execute the `clinicadl tsvtool getlabels` and `clinicadl tsvtool {split|kfold}` commands prior to running this task to have the correct TSV file organization.

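Moreover, a CAPS directory obtained by running the `t1-linear` pipeline of ClinicaDL must be available, and `clinicadl extract` must have been run on it to produce the JSON file given as `preprocessing_json`.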

Running the task

This task can be run with the following command line:

clinicadl random-search [OPTIONS] LAUNCH_DIRECTORY NAME

- `LAUNCH_DIRECTORY` (Path) is the parent directory of the output folder. It must contain the file `random_search.toml`.
- `NAME` (str) is the name of the output folder containing the experiment.

Content of random_search.toml

random_search.toml must be present in launch_dir before running the command.

Mandatory variables:

- `network_task` (str) is the task learnt by the network. Must be either `classification` or `regression` (random sampling for `reconstruction` is not implemented yet). Sampling function: `fixed`.
- `caps_directory` (str) is the input folder containing the neuroimaging data in a CAPS hierarchy. Sampling function: `fixed`.
- `preprocessing_json` (str) is the JSON file produced by `clinicadl extract` that is used for the search. Sampling function: `fixed`.
- `tsv_path` (str) is the input folder of a TSV file tree generated by `clinicadl tsvtool {split|kfold}`. Sampling function: `fixed`.
- `diagnoses` (list of str) is the list of the labels that will be used for training. Sampling function: `fixed`.
- `epochs` (int) is the maximum number of epochs. Sampling function: `fixed`.
- `n_convblocks` (int) is the number of convolutional blocks in the CNN. Sampling function: `randint`.
- `first_conv_width` (int) is the number of kernels in the first convolutional layer. Sampling function: `choice`.
- `n_fcblocks` (int) is the number of fully-connected layers at the end of the CNN. Sampling function: `randint`.
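Before launching, the presence of the mandatory variables can be checked with a small script. The sketch below is a hypothetical helper, not part of ClinicaDL. It assumes, as in the example file of this page, that variables may be spread over several TOML tables (`[Random_Search]`, `[Data]`, `[Optimization]`, ...), and simply merges them. The loader relies on the standard `tomllib` module, which requires Python 3.11 or later.

```python
from pathlib import Path

MANDATORY_VARIABLES = (
    "network_task", "caps_directory", "preprocessing_json", "tsv_path",
    "diagnoses", "epochs", "n_convblocks", "first_conv_width", "n_fcblocks",
)
SUPPORTED_TASKS = ("classification", "regression")


def load_space(launch_dir):
    """Read <launch_dir>/random_search.toml (tomllib needs Python 3.11+)."""
    import tomllib

    with open(Path(launch_dir) / "random_search.toml", "rb") as f:
        return tomllib.load(f)


def merge_tables(space):
    """Flatten all TOML tables into one dictionary (assumption, see above)."""
    merged = {}
    for key, value in space.items():
        if isinstance(value, dict):
            merged.update(value)  # content of a [Table]
        else:
            merged[key] = value  # top-level key outside any table
    return merged


def check_mandatory(space):
    """Return the list of problems found in the search space (empty if none)."""
    merged = merge_tables(space)
    problems = [f"missing mandatory variable: {name}"
                for name in MANDATORY_VARIABLES if name not in merged]
    task = merged.get("network_task")
    if task is not None and task not in SUPPORTED_TASKS:
        problems.append(f"unsupported network_task: {task!r}")
    return problems
```

A typical use is `check_mandatory(load_space("launch_dir"))`, which returns an empty list when all mandatory variables are present.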

Optional variables:

- Architecture hyperparameters

  - `channels_limit` (int) is the maximum number of output channels that can be found in the CNN. Sampling function: `fixed`. Default: `512`.
  - `d_reduction` (str) is the type of dimension reduction applied to the feature maps. Values must be chosen among `MaxPooling` and `stride`. With `stride`, the dimension is reduced by using a stride of 2 in one convolutional layer per convolutional block. Sampling function: `choice`. Default: `MaxPooling`.
  - `n_conv` (int) is the number of convolutional layers in a convolutional block. It is sampled independently for each convolutional block. Sampling function: `randint`. Default: `1`.
  - `network_normalization` (str) is the type of normalization performed after convolutions. Values must be chosen among `BatchNorm`, `InstanceNorm` and `None`. Sampling function: `choice`. Default: `BatchNorm`.

- Computational resources

  - `--gpu/--no-gpu` (bool) forces the use of a GPU or of CPUs. By default, the task tries to use a GPU and raises an error if none is found.
  - `--n_proc` (int) is the number of workers used by the DataLoader. Default value: `2`.
  - `--batch_size` (int) is the batch size used by the DataLoader. Default value: `2`.
  - `--evaluation_steps` (int) is the number of iterations after which an evaluation is performed within an epoch. By default, an evaluation is only performed at the end of each epoch.
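When the computational resources are redefined on the command line, the options go before the two positional arguments. The hypothetical Python helper below only assembles the argument list from the flags documented above; it does not validate them against ClinicaDL, and options left to `None` are omitted so that the defaults apply.

```python
def random_search_command(launch_dir, name, gpu=None, n_proc=None,
                          batch_size=None, evaluation_steps=None):
    """Build the argument list of `clinicadl random-search` (hypothetical helper)."""
    command = ["clinicadl", "random-search"]
    if gpu is not None:
        command.append("--gpu" if gpu else "--no-gpu")
    for flag, value in (("--n_proc", n_proc),
                        ("--batch_size", batch_size),
                        ("--evaluation_steps", evaluation_steps)):
        if value is not None:
            command += [flag, str(value)]
    # Positional arguments come last: LAUNCH_DIRECTORY NAME.
    command += [str(launch_dir), name]
    return command
```

For instance, `random_search_command("launch_dir", "search_1", gpu=False, n_proc=4)` returns `["clinicadl", "random-search", "--no-gpu", "--n_proc", "4", "launch_dir", "search_1"]`, which can then be executed with `subprocess.run(..., check=True)`.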

- Data management

  - `baseline` (bool): when set to `True`, only the `_baseline.tsv` files are loaded. Sampling function: `choice`. Default: `False`.
  - `data_augmentation` (list of str) is the list of data augmentation transforms applied to the training data. Values must be chosen among [`None`, `Noise`, `Erasing`, `CropPad`, `Smoothing`, `Motion`, `Ghosting`, `Spike`, `BiasField`, `RandomBlur`, `RandomSwap`]. Sampling function: `fixed`. Default: `False`.
  - `unnormalize` (bool) disables the min-max normalization that is performed by default. Sampling function: `choice`. Default: `False`.
  - `sampler` (str) is the sampler used on the training set. Values must be chosen among [`random`, `weighted`]. Sampling function: `choice`. Default: `random`.

- Cross-validation arguments

  - `--n_splits` (int) is the number of splits k to load in the case of a k-fold cross-validation. By default, a single split is loaded.
  - `--split` (list of int) is the subset of splits that will be used for training. By default, all available splits are used.
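The interplay of `--n_splits` and `--split` can be summarized by the hypothetical function below. It is a sketch of the behaviour described above, assuming that splits are numbered from 0 and that the single-split case corresponds to split 0; it is not ClinicaDL code.

```python
def splits_to_train(n_splits=None, split=None):
    """Return the indices of the splits that would be used for training."""
    # Without n_splits, a single split is loaded (assumed to be split 0).
    available = list(range(n_splits)) if n_splits else [0]
    if split is None:
        return available  # by default, all available splits are used
    unknown = [s for s in split if s not in available]
    if unknown:
        raise ValueError(f"splits {unknown} are not available")
    return sorted(set(split))
```

With `n_splits=5` and `split=[3, 1]`, only splits 1 and 3 would be trained.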

- Optimization parameters

  - `learning_rate` (float) is the learning rate used to perform the weight update. Sampling function: `exponent`. Default: `4` (leading to a value of `1e-4`).
  - `wd_bool` (bool): if `True`, `weight_decay` is used; otherwise, the weight decay of the Adam optimizer is set to 0. Sampling function: `choice`. Default: `True`.
  - `weight_decay` (float) is the weight decay used by the Adam optimizer. Sampling function: `exponent`, conditioned by `wd_bool`. Default: `4` (leading to a value of `1e-4`).
  - `dropout` (float) is the rate of dropout applied in dropout layers. Sampling function: `uniform`. Default: `0.0`.
  - `patience` (int) is the number of epochs for early stopping patience. Sampling function: `fixed`. Default: `0`.
  - `tolerance` (float) is the value used for early stopping tolerance. Sampling function: `fixed`. Default: `0.0`.
  - `accumulation_steps` (int) is the number of iterations during which gradients are accumulated before the weights are updated. Default: `1`.
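The exponent convention and the role of `wd_bool` can be illustrated with the sketch below. It assumes that a value given as a two-element list is an interval to sample from and that any other value is fixed; the function names are hypothetical. As in the two example option sets of this page, `weight_decay` is sampled in every case, but the value actually given to Adam is 0 when `wd_bool` is `False`.

```python
import random


def _is_interval(spec):
    return isinstance(spec, (list, tuple)) and len(spec) == 2


def sample_exponent(spec, rng=random):
    """exponent: x ~ U[min, max] (or a fixed x), returns 10^-x."""
    x = rng.uniform(*spec) if _is_interval(spec) else spec
    return 10 ** -x


def sample_optimization(space, rng=random):
    """Sample the optimization options, using the defaults listed above."""
    wd_spec = space.get("wd_bool", True)
    dropout_spec = space.get("dropout", 0.0)
    return {
        "learning_rate": sample_exponent(space.get("learning_rate", 4), rng),
        "wd_bool": rng.choice(wd_spec) if isinstance(wd_spec, list) else wd_spec,
        "weight_decay": sample_exponent(space.get("weight_decay", 4), rng),
        "dropout": rng.uniform(*dropout_spec) if _is_interval(dropout_spec) else dropout_spec,
        "patience": space.get("patience", 0),
        "tolerance": space.get("tolerance", 0.0),
    }


def adam_weight_decay(options):
    """Weight decay actually given to Adam: 0 when wd_bool is False."""
    return options["weight_decay"] if options["wd_bool"] else 0.0
```

With an empty search space, the defaults give a learning rate and a weight decay of 1e-4, no dropout and no early-stopping patience.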

- Transfer learning

  - `--transfer_path` (str) is the path to a result folder (output of `clinicadl train`). The best model of this folder will be used to initialize the network as explained in the implementation details. If nothing is given, the initialization is random.
  - `--transfer_selection_metric` (str) is the metric according to which the best model of `transfer_path` will be loaded. This argument is only taken into account if the source network is a CNN. Choices are `best_loss` and `best_balanced_accuracy`. Default: `best_balanced_accuracy`.

Outputs

Results are stored in the folder given by `launch_dir`, according to the following file structure:

    <launch_dir>
    ├── random_search.toml
    └── <name>

Example of setting

In the following, we give an example of a `random_search.toml` file and two possible sets of options that can be sampled from it.

random_search.toml

    [Random_Search]
    # Mandatory args
    caps_directory = "/path/to/caps"
    tsv_path = "/path/to/tsv"
    preprocessing_json = "extract_image.json"
    network_task = "classification"
    n_convblocks = [2, 6]
    first_conv_width = [4, 8, 16, 32]
    n_fcblocks = [1, 3]
    # Options
    channels_limit = 64
    d_reduction = ["MaxPooling", "stride"]
    network_normalization = ["None", "BatchNorm"]
    wd_bool = [true, false]

    [Architecture]
    dropout = [0, 0.9]

    [Data]
    diagnoses = ["AD", "CN"]

    [Optimization]
    epochs = 100
    learning_rate = [2, 5]
    weight_decay = [2, 4]

Options #1

    {"mode": "image",
     "network_task": "classification",
     "caps_directory": "/path/to/caps",
     "preprocessing_dict": {...},
     "tsv_path": "/path/to/tsv",
     "diagnoses": ["AD", "CN"],
     "baseline": false,
     "unnormalize": false,
     "data_augmentation": false,
     "sampler": "random",
     "epochs": 100,
     "learning_rate": 5.64341e-4,
     "wd_bool": true,
     "weight_decay": 4.87963e-3,
     "patience": 20,
     "tolerance": 0,
     "accumulation_steps": 1,
     "evaluation_steps": 0,
     "dropout": 0.06792280327917641,
     "network_normalization": null,
     "convolutions":
       {"conv0": {"in_channels": 1, "out_channels": 8, "n_conv": 2, "d_reduction": "MaxPooling"},
        "conv1": {"in_channels": 8, "out_channels": 16, "n_conv": 3, "d_reduction": "MaxPooling"},
        "conv2": {"in_channels": 16, "out_channels": 32, "n_conv": 2, "d_reduction": "MaxPooling"},
        "conv3": {"in_channels": 32, "out_channels": 64, "n_conv": 1, "d_reduction": "MaxPooling"},
        "conv4": {"in_channels": 64, "out_channels": 64, "n_conv": 1, "d_reduction": "MaxPooling"}},
     "fc":
       {"FC0": {"in_features": 16128, "out_features": 180},
        "FC1": {"in_features": 180, "out_features": 2}}
    }

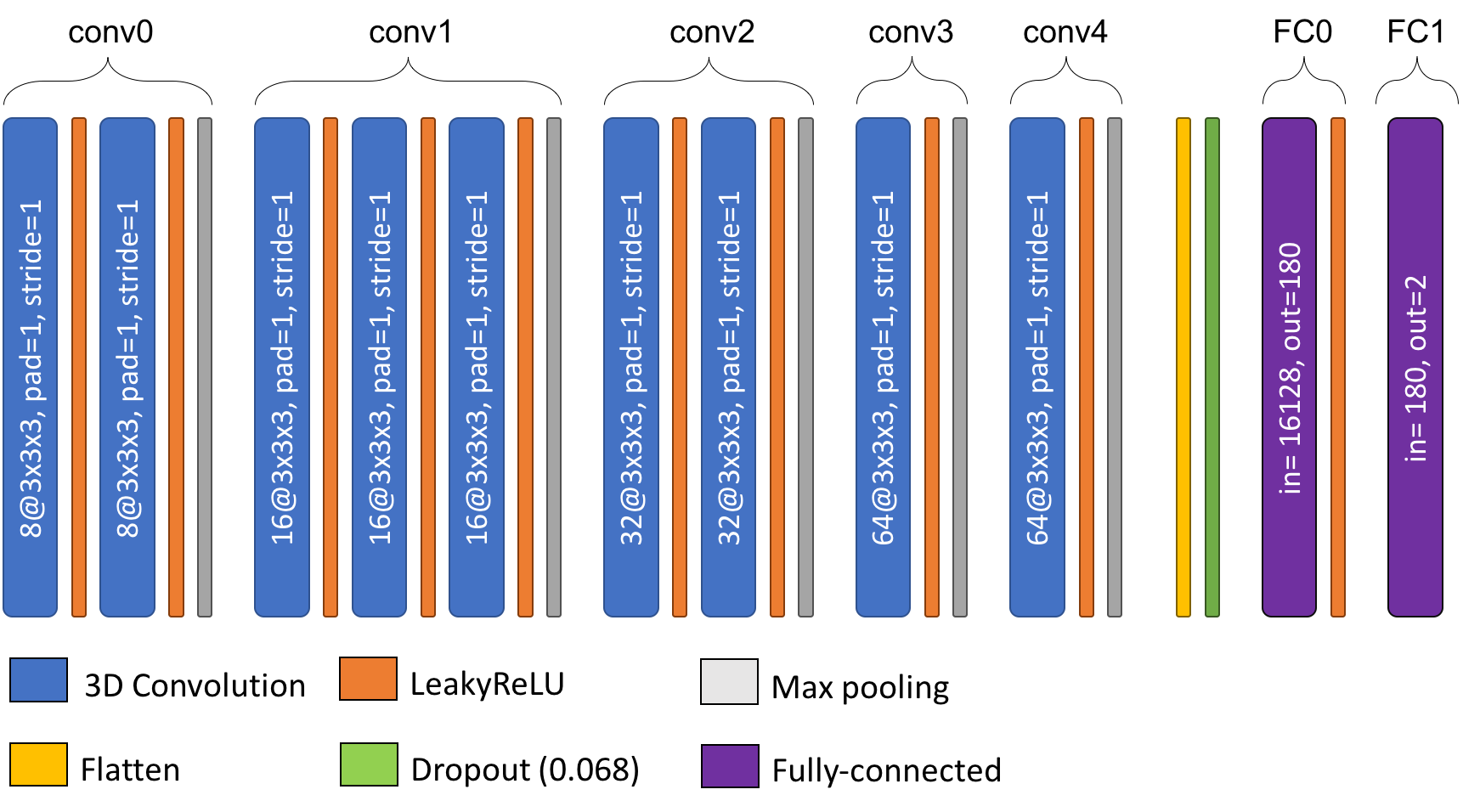

In this case, weight decay is applied, and the values for the hyperparameters of the architecture are the following:

    "n_convblocks": 5,
    "first_conv_width": 8,
    "channels_limit": 64,
    "d_reduction": "MaxPooling",
    "n_fcblocks": 2

`n_conv` is sampled independently for each convolutional block, so the number of layers can differ from one block to another, as described in the `convolutions` dictionary.
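Both example option sets are consistent with a simple rule: the first block has `first_conv_width` output channels, each following block doubles the number of channels, the doubling is capped by `channels_limit`, and `n_conv` is drawn independently for each block. The sketch below rebuilds the `convolutions` dictionary under that rule, which is inferred from the examples rather than taken from ClinicaDL's source; the single input channel, the `n_conv_range` parameter and the function name are assumptions.

```python
import random


def build_convolutions(n_convblocks, first_conv_width, channels_limit=512,
                       n_conv_range=(1, 1), d_reduction="MaxPooling", rng=random):
    """Describe each convolutional block in the format of the example options."""
    convolutions = {}
    in_channels = 1  # assumption: single-channel input image
    out_channels = min(first_conv_width, channels_limit)
    for i in range(n_convblocks):
        convolutions[f"conv{i}"] = {
            "in_channels": in_channels,
            "out_channels": out_channels,
            # n_conv is drawn independently for each block (randint)
            "n_conv": rng.randint(*n_conv_range),
            "d_reduction": d_reduction,
        }
        in_channels = out_channels
        # Double the width for the next block, without exceeding channels_limit.
        out_channels = min(2 * out_channels, channels_limit)
    return convolutions
```

With `n_convblocks=5`, `first_conv_width=8` and `channels_limit=64`, the output channels are 8, 16, 32, 64 and 64, as in the options above.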

The number of features in the fully-connected layers is computed so that the ratio between the sizes of consecutive layers is constant (here 16128 / 180 ≈ 180 / 2).
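One way to obtain a constant ratio is a geometric progression from the flattened feature size down to the number of outputs: with `n_fcblocks` layers the ratio is (in_features / n_outputs)^(1 / n_fcblocks), so here sqrt(16128 / 2) ≈ 89.8 and 16128 / 89.8 ≈ 179.6, which rounds to 180. The sketch below follows that reading. Rounding to the nearest integer and forcing the last layer to output `n_outputs` (2 for the two diagnoses) are assumptions chosen to match the examples, not ClinicaDL's documented behaviour.

```python
def build_fc(in_features, n_fcblocks, n_outputs=2):
    """Fully-connected layers whose sizes decrease by a constant ratio."""
    ratio = (in_features / n_outputs) ** (1 / n_fcblocks)
    fc = {}
    for i in range(n_fcblocks):
        last = i == n_fcblocks - 1
        # The last layer always maps to the number of outputs.
        out_features = n_outputs if last else round(in_features / ratio)
        fc[f"FC{i}"] = {"in_features": in_features, "out_features": out_features}
        in_features = out_features
    return fc
```

`build_fc(16128, 2)` reproduces the `fc` dictionary of Options #1, and `build_fc(21952, 1)` the one of Options #2.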

The scheme of the corresponding architecture is the following:

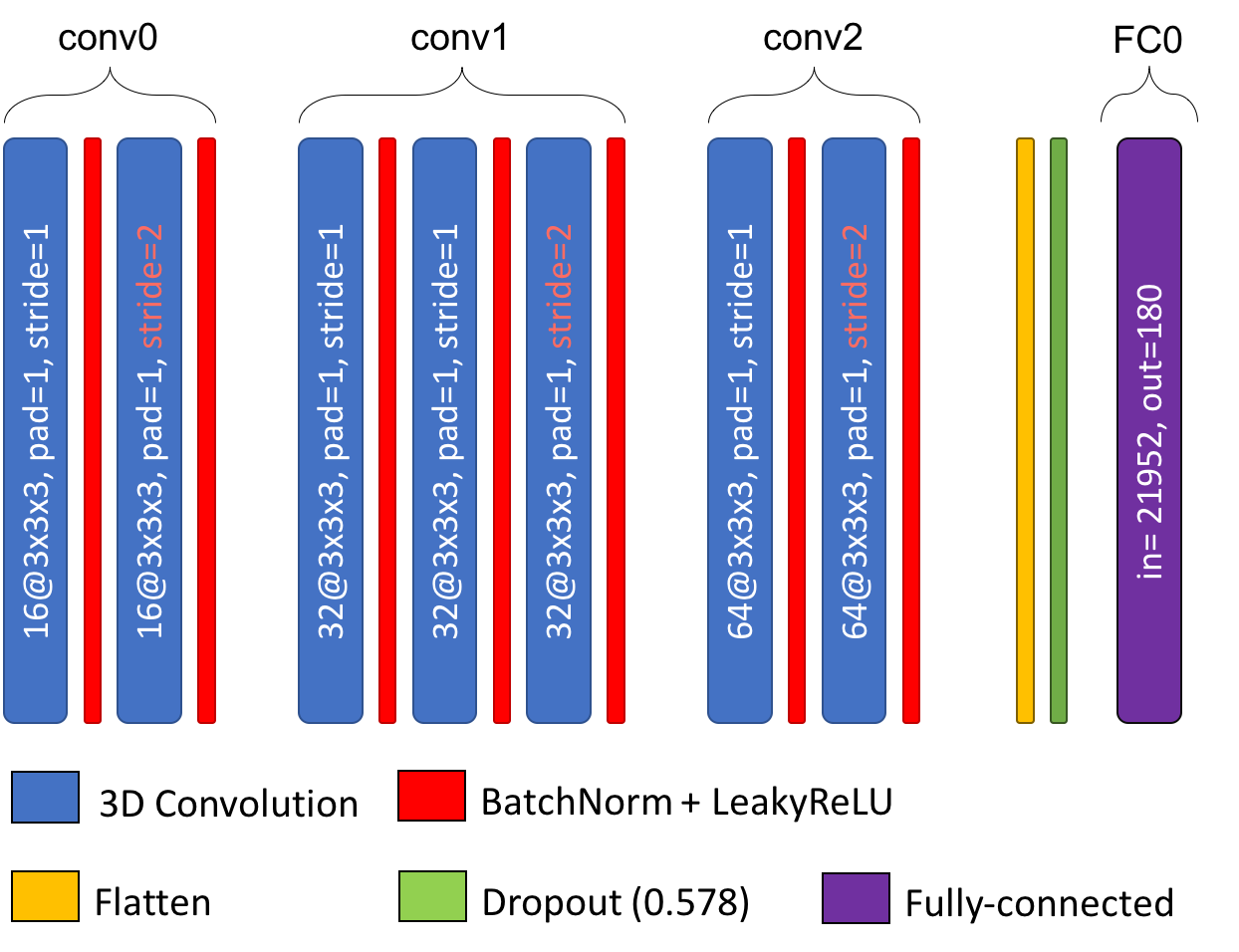

Options #2

    {"mode": "image",
     "network_task": "classification",
     "caps_directory": "/path/to/caps",
     "preprocessing_dict": {...},
     "tsv_path": "/path/to/tsv",
     "diagnoses": ["AD", "CN"],
     "baseline": false,
     "unnormalize": false,
     "data_augmentation": false,
     "sampler": "random",
     "epochs": 100,
     "learning_rate": 7.09740e-3,
     "wd_bool": false,
     "weight_decay": 4.09832e-4,
     "patience": 20,
     "tolerance": 0,
     "accumulation_steps": 1,
     "evaluation_steps": 0,
     "dropout": 0.5783296483092104,
     "network_normalization": null,
     "convolutions":
       {"conv0": {"in_channels": 1, "out_channels": 16, "n_conv": 2, "d_reduction": "stride"},
        "conv1": {"in_channels": 16, "out_channels": 32, "n_conv": 3, "d_reduction": "stride"},
        "conv2": {"in_channels": 32, "out_channels": 64, "n_conv": 2, "d_reduction": "stride"}},
     "fc":
       {"FC0": {"in_features": 21952, "out_features": 2}}
    }

In this case, weight decay is not applied, and the values for the hyperparameters of the architecture are the following:

    "n_convblocks": 3,
    "first_conv_width": 16,
    "channels_limit": 64,
    "d_reduction": "stride",
    "n_fcblocks": 1

`n_conv` is sampled independently for each convolutional block, so the number of layers can differ from one block to another, as described in the `convolutions` dictionary.

The scheme of the corresponding architecture is the following: